AI-Generated sound therapy for critically ill patients

At the start of 2022, I joined Ingenium – Canada’s Museums of Science and Innovation as a research assistant. Ingenium curators Dr. Tom Everrett (Communications) and Dr. David Pantalony (Physical Sciences and Medicine) invited me to write about a research project that I am currently affiliated with called Autonomous Adaptive Soundscape (AAS).

The AAS is an intelligent bio-algorithmic system that uses machine learning and autonomic biosignals to select therapeutic soundscapes that help patients in the intensive care unit (ICU) relax. Sensors monitor how a patient is feeling, and the system delivers soundscapes to match.

The AAS project is funded by the Canadian New Frontiers in Research Fund, the Alberta Machine Intelligence Institute, and by a Pilot Seed Grant from the University of Alberta Office of VP Research. The project is led by Professor of Music Michael Frishkopf (Principal Investigator), with Professors Martha Steenstrup, Abram Hindle, Osmar Zaiane, and Michael Cohen (Computer Science, University of Aizu, Japan), Elisavet Papathanassoglou (Nursing), and Demetrios James Kutsogiannis (Critical Care Medicine), along with a team of researchers and participants from diverse backgrounds. The project draws on several research fields including Sound Studies, Sound Therapy, Composition and Sound Design, Machine Learning, Critical Care, Nursing, and Rehabilitation Medicine.

Critically ill patients often experience significant levels of mental and physical stress. Anxiety, mood disorders, sleep deprivation, and delirium related to stress are common. Sound and music therapy is a non-invasive and low-cost method to relieve stress with very limited side effects.

Unlike music therapists, who cannot be present continuously, AAS has the potential to provide a diverse range of sound and music treatments – tailored to the specific needs of each patient – around the clock.

The basic idea of the AAS is a three-point feedback loop connecting a patient, an AI machine learning agent, and a sound generator. The AI agent estimates the patient’s current stress level in real time by means of a biosensor measuring autonomic signals, such as pulse rate or galvanic skin response. If stress appears to be increasing, the AI agent can signal the sound generator to try a new soundscape. The patient then hears the new soundscape, completing the loop.

The key technological components are thus biosensors, which measure the body and produce biosignals; an interpreter, which analyzes biosignals and produces environment and reward signals; a learning component, which uses the environment and reward signals to select a soundscape; and a soundscape generator, which selects, filters, or combines sounds from a sound library.

In this way, AAS operates on its own as an adaptive and automatic sound generator that can function 24 hours a day, 7 days a week. No active or conscious engagement with the system is needed from the patient, though an “off” switch is provided for patients and health care providers.
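For readers who think in code, the loop described above can be sketched in a few lines of Python. This is only an illustration under assumptions of my own, not the project's actual implementation: the class names (`Interpreter`, `Learner`), the pulse-above-resting stress proxy, the resting pulse of 60 beats per minute, and the simple "epsilon-greedy" selection rule are all hypothetical stand-ins. The article does not specify which learning method the AAS uses.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Interpreter:
    """Turns a raw biosignal (here, pulse rate) into a reward signal."""
    resting_pulse: float = 60.0  # assumed baseline, beats per minute
    previous_stress: Optional[float] = None

    def reward(self, pulse_bpm: float) -> float:
        # Hypothetical stress proxy: how far the pulse sits above resting.
        stress = max(0.0, pulse_bpm - self.resting_pulse)
        # Reward is the drop in stress since the last reading (0 at the start).
        r = 0.0 if self.previous_stress is None else self.previous_stress - stress
        self.previous_stress = stress
        return r


@dataclass
class Learner:
    """Epsilon-greedy choice among soundscapes (an assumed, simple learner)."""
    soundscapes: List[str]
    epsilon: float = 0.1
    rng: random.Random = field(default_factory=random.Random)
    values: Dict[str, float] = field(default_factory=dict)
    counts: Dict[str, int] = field(default_factory=dict)

    def __post_init__(self) -> None:
        self.values = {s: 0.0 for s in self.soundscapes}
        self.counts = {s: 0 for s in self.soundscapes}

    def select(self) -> str:
        # Occasionally explore; otherwise pick the best-valued soundscape.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.soundscapes)
        return max(self.soundscapes, key=self.values.get)

    def update(self, soundscape: str, reward: float) -> None:
        # Running average of the rewards observed for this soundscape.
        self.counts[soundscape] += 1
        n = self.counts[soundscape]
        self.values[soundscape] += (reward - self.values[soundscape]) / n


def run_loop(
    pulse_readings: List[float],
    learner: Learner,
    interpreter: Interpreter,
    enabled: Callable[[], bool] = lambda: True,  # stands in for the "off" switch
) -> List[str]:
    """Play one soundscape per biosensor reading and return what was played."""
    played: List[str] = []
    current = learner.select()
    for pulse in pulse_readings:
        if not enabled():
            break
        played.append(current)  # stand-in for the sound generator
        learner.update(current, interpreter.reward(pulse))
        current = learner.select()
    return played


if __name__ == "__main__":
    agent = Learner(["forest", "beach"], epsilon=0.0)
    print(run_loop([90, 95, 85, 80], agent, Interpreter()))
```

In this toy run, the pulse rises while the first soundscape plays, so the agent switches to the second and stays with it as the pulse falls. A real system would of course work with richer biosignals and far more careful stress estimation than a single pulse reading.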

The project team is composed of a diverse group including academics, graduate students, health professionals, and volunteers. As a team member, part of my role is to curate a global soundscape library with Greg Mulyk, another team member who is a sound designer and programmer.

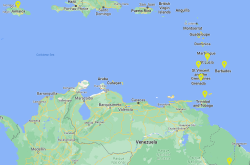

Since joining the AAS team in March 2020, I have collected more than 600 soundscapes from all over the world. This sound library includes natural sounds, musical sounds, speech, environmental noises, and synthetic sounds.

Greg and I have cataloged the collected sounds according to geographical and cultural areas, sonic properties, and environmental types such as biomes for natural sounds.

Since patients come from various cultural, ethnic, and geographical backgrounds, and suffer from varied medical conditions, different patients may respond best to different soundscapes, and their preferred relaxing sounds may also vary over time.

The most suitable soundscapes for a specific patient depend on factors such as the patient’s health status, linguistic and demographic profile, and listening preferences.

Soundscapes that work for some patients may also become repetitive over time, in which case the AI agent interprets the patient’s biosignal responses and selects another sound set based on its predictions.

With these possibilities in mind, Greg and I are building a soundscape library that is as diverse as possible, so that the system can draw on a wide selection of sonic types and sources. The collection features a wide range of natural sounds that represent various biomes (e.g., beaches, forests, lakes) and places (e.g., countries, continents, and latitudes). We have also included musical sounds, such as singing bowls and folk music from various regions worldwide. All the collected soundscapes are then edited and processed into seamless loops ready for play by the AAS sound generator. After editing, the soundscapes are steady, relaxing, and free of noticeable sonic events.

Some of the raw, unedited soundscapes are currently reflected on an evolving global sound map. You can learn more about the AAS project here.
